We moved one of our legacy internal Rails applications from Heroku, PostgreSQL, and Heroku Scheduler to our Kubernetes platform, SQLite, and some Bash magic. The final result is surprisingly not bad!

Background

SQLite is a small, embedded, fully-featured database that is persisted to disk as a single binary file. It offers a fast, lightweight, ACID compliant database without the need of running a separate service or using network connections. While its intended use was for embedded devices, it has grown over the years to be a super popular alternative to traditional database systems.

At Harvest, we had a handful of internal tools that we were migrating off of Heroku onto our internal Kubernetes infrastructure. One of them used a Heroku PostgreSQL database instance, and I was struggling to decide how best to migrate this application.

Originally, I thought about porting the application to MySQL to match our production database infrastructure, but a coworker suggested that we should take a look at SQLite as an alternative to running yet another MySQL cluster. Assuming that this worked, it would both simplify the application deployment and save us some money by running a lot fewer pods.

This application is an internal-only tool that helps our support team keep track of their tickets and it only had about 30MB of data at the time that it was migrated, so this seemed like a good candidate to experiment with.

The rewrite

Rails has built-in support for SQLite, so it was just a matter of pulling down the SQLite gem, and setting up the database configuration. Right?

Well, not exactly.

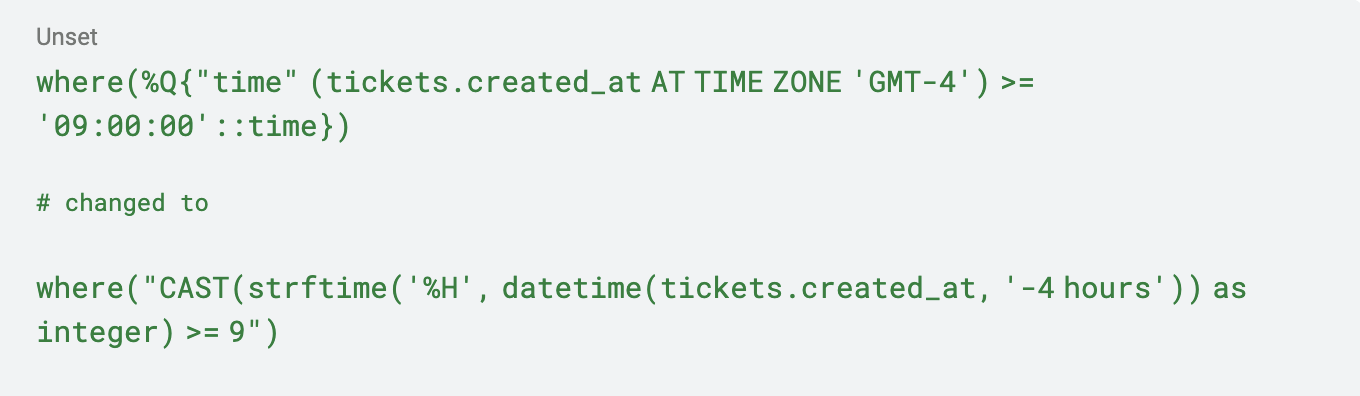

While SQL is a standard, different databases can choose to implement different features in different ways. And this particular application’s queries relied on some PostgreSQL specific date-time functionality that wasn’t implemented in SQLite.

I had to take the following ActiveRecord query and convert it.

While this is some terrible hard-coded date math (that doesn’t take into account daylight savings time), and isn’t the best way to fix the issue, it worked and allowed me to keep migrating. Overall, about 20 or so queries needed to be updated, but thankfully there were unit tests to help me refactor!

Deployment complications

Too many pods

Once I got the application running well on my local development environment, I set out to deploy it to a staging environment. This is where it got interesting.

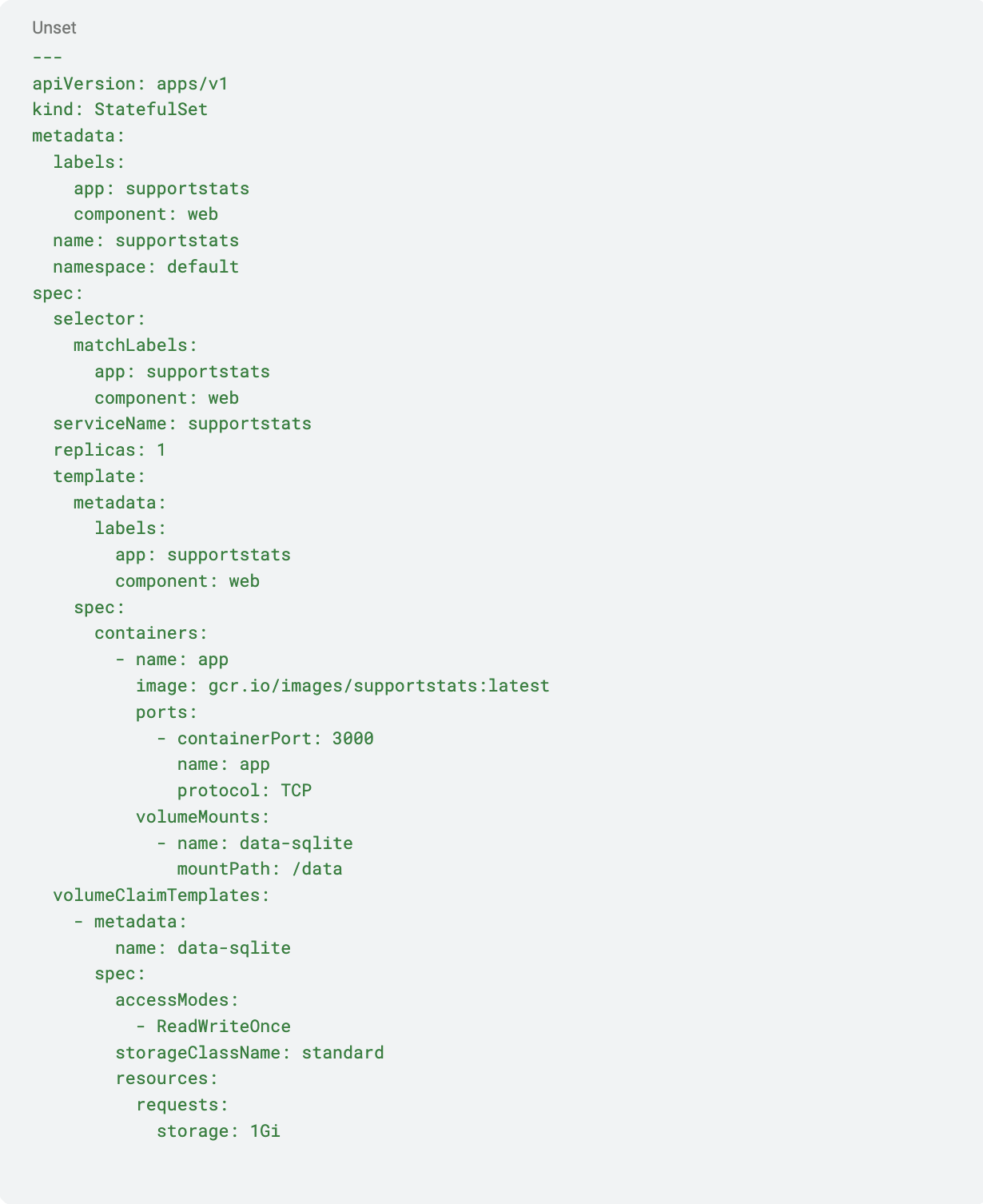

As SQLite “connections” are just opening a file on the filesystem, the database itself needs to be on the container’s filesystem. This means that even if we mount a persistent volume, each pod in a deployment will see a different version of the data. Normally, this would be a deal breaker — but for this application it worked out well in the end.

I ended up just creating a single-replica StatefulSet that has the data mounted so that the application would retain access to all of its data upon pod restart. Here is a simplified version of what I ended up with.

But wait, there’s more!

CronJobs? Why does it always have to be CronJobs?

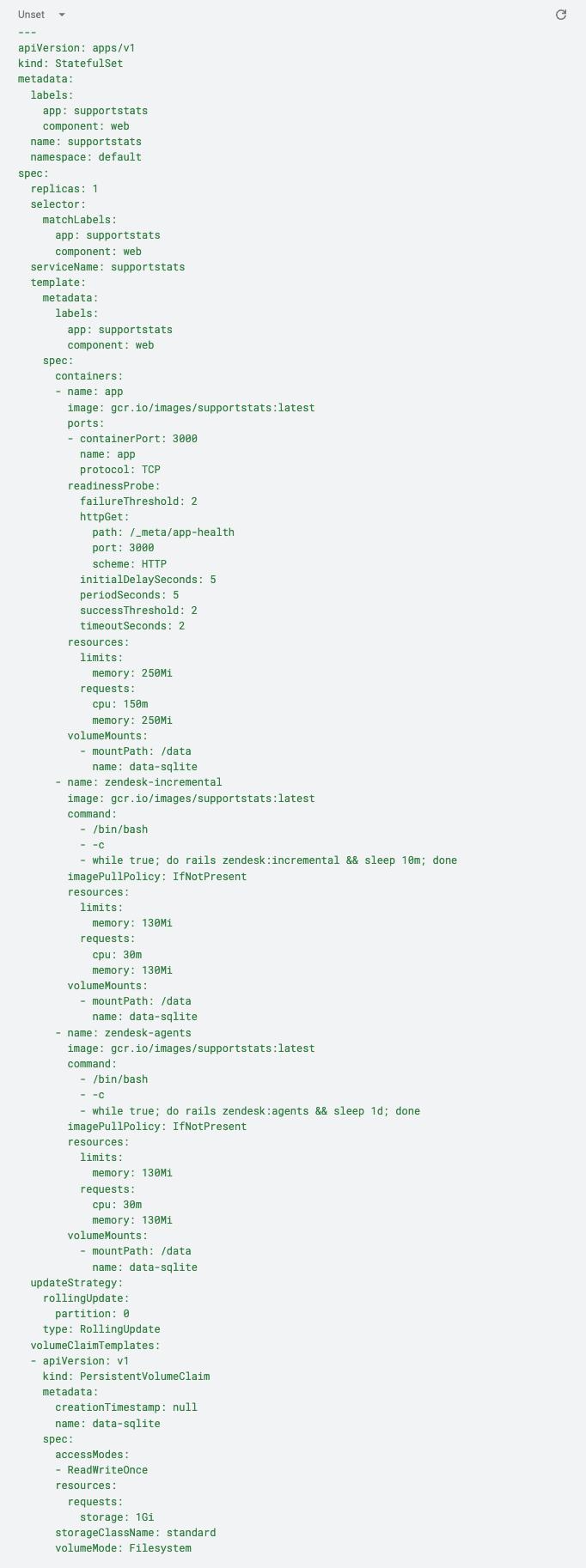

This application also had some periodic data refresh tasks that needed to be run. Previously, these were implemented with a Heroku Scheduler, so I attempted to recreate them with a Kubernetes CronJob, but those create a new pod with each run. Normally, this would be ideal, but we run into the issue where each pod gets its own data. The job would run successfully, and then the data would be lost when the pod terminated. That wouldn’t work, so I had to come up with a new, innovative solution.

Any and all updates have to happen within the same pod, so I created 2 separate sidecar containers that start with the following command:

This command will run the rails task every 10 minutes, sleeping the rest of the time.

It works because all the containers in the StatefulSet pod run with the same volume mounts. It’s essentially just spawning an extra process which has access to the same files and performs the incremental update as expected.

Once this was ready to go into production, I needed a way to seed the database schema and kick off a full data load. Luckily, there was a rails migration that I could run. All I had to do was exec into the StatefulSet’s pod and run the rails database:migrate command.

Downsides to this approach

While this worked for us in this specific use case, there are some downsides to this approach.

The biggest one is that you can’t scale the StatefulSet beyond a single pod. This would be awful if this application was expecting to accept public traffic, but this is a simple internal business-process automation tool that just needs to run, so it works perfectly. Having only one pod means that we sometimes incur downtime during Kubernetes node pool upgrades.

Another downside is the lack of tooling. To “connect” to the database, you need to shell into the running pod and execute sqlite3 commands locally to do any database maintenance if needed. While this works fairly well, it is a bit awkward compared to connecting to a remote database.

Lack of support from other libraries is also an issue. While SQLite is well supported by many programming languages, it often receives less support in terms of ORM libraries. For us, Rails ActiveRecord supported everything we needed, but the level of support is different from MySQL.

Overall, surprisingly not bad!

By doing this migration, we were able to decrease our Heroku cost by around $90/month USD and replace it with a single pod running in our existing kubernetes clusters. If you happen to have five or six similar internal-only tools floating around your environment, this could be a great option to simplify your deployment.

As for performance, the application page-load time decreased by about 150ms. While we weren’t optimizing for speed, it does make the experience of using it a lot better.

It has been running in production internally for several months now and has been rock solid.

Looking ahead, I think it would be interesting to see what this application looks like with something like https://dqlite.io/ to automatically replicate the data across a raft cluster. But that will probably require its own article.

Full solution StatefulSet yaml

1. Reworking the application wasn’t exactly in scope, and I still wanted to see if the idea worked

2. Also known as a hacky workaround.